
Using what I learnt from my offline confusion network experiments, I built a user interface that lets users review and correct a recognition result. The UI was designed with a small display and a tablet-based (stylus) input device in mind.

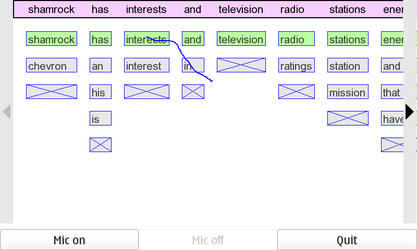

As shown in the above screenshot, the 1-best recognition result is shown at the top in a purple box. The likely word alternates in each confusion network cluster are displayed below it, with the currently selected word highlighted in green. Each cluster also contains an "X" box, which is used to indicate that no word should be used for that cluster. Between adjacent word clusters there is a small divider gap. This gap can be used to create a new cluster between existing ones (e.g. inserting "industries" between "shamrock" and "has").
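The cluster layout described above can be modelled with a small data structure. This is only a minimal sketch, not the code used in the demo: the `Cluster` class, the `insert_cluster` helper, and the alternates "shamrocks", "had", and "the" are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    """One confusion network cluster (hypothetical model, not the demo's code)."""
    alternates: List[str]          # candidate words, most likely first
    selected: Optional[int] = 0    # index into alternates; None models the "X" box

    def word(self) -> Optional[str]:
        return None if self.selected is None else self.alternates[self.selected]


def current_text(clusters: List[Cluster]) -> str:
    """Join the selected word of every cluster, skipping clusters set to "X"."""
    words = (c.word() for c in clusters)
    return " ".join(w for w in words if w is not None)


def insert_cluster(clusters: List[Cluster], gap_index: int, word: str) -> None:
    """Create a new one-word cluster in the divider gap before clusters[gap_index]."""
    clusters.insert(gap_index, Cluster([word]))


clusters = [
    Cluster(["shamrock", "shamrocks"]),
    Cluster(["has", "had"]),
    Cluster(["a", "the"], selected=None),  # user tapped the "X" box
]
insert_cluster(clusters, 1, "industries")
print(current_text(clusters))  # shamrock industries has
```

Modelling the "X" box as `None` keeps "no word" separate from the list of real alternates, so the displayed text can simply skip such clusters.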

Selection is done by tapping on the word boxes or by stroking through one or more words. Strokes that intersect more than one word in a particular cluster are treated as invalid. A blue trail of the current stylus stroke is shown and is erased when the stylus is lifted.
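The stroke rule can be sketched as follows. This is an illustration under stated assumptions rather than the demo's implementation: it assumes the stroke arrives as a list of sampled (x, y) points and that each word box is an axis-aligned rectangle, and the function names and box coordinates are hypothetical.

```python
def words_hit(stroke, boxes):
    """Return the set of (cluster, word) pairs whose box contains a stroke point.

    stroke: list of (x, y) points sampled along the stylus path (assumption).
    boxes:  list of (cluster_idx, word_idx, x0, y0, x1, y1) rectangles.
    """
    hits = set()
    for x, y in stroke:
        for cluster, word, x0, y0, x1, y1 in boxes:
            if x0 <= x <= x1 and y0 <= y <= y1:
                hits.add((cluster, word))
    return hits


def selections_from_stroke(stroke, boxes):
    """Map each touched cluster to its touched word, or return None if the
    stroke touches more than one word in any single cluster (invalid stroke)."""
    chosen = {}
    for cluster, word in sorted(words_hit(stroke, boxes)):
        if cluster in chosen:
            return None  # second distinct word in the same cluster
        chosen[cluster] = word
    return chosen


# Two clusters side by side, each with two vertically stacked word boxes.
boxes = [
    (0, 0, 0, 0, 50, 20),
    (0, 1, 0, 25, 50, 45),
    (1, 0, 60, 0, 110, 20),
    (1, 1, 60, 25, 110, 45),
]
horizontal = [(10, 35), (55, 35), (100, 35)]  # crosses one word per cluster
vertical = [(25, 10), (25, 35)]               # crosses two words in cluster 0

print(selections_from_stroke(horizontal, boxes))  # {0: 1, 1: 1}
print(selections_from_stroke(vertical, boxes))    # None
```

Sampling points is the simplest possible hit test. A fast stroke could skip over a narrow box between samples, so a real implementation would more likely test the line segments between consecutive points.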

Arrow buttons are displayed on the left and right sides. These buttons allow the user to scroll through the recognition result as the result is often wider than the display. Users activate the buttons either by tapping, or by "tickling" the button by holding the stylus down and moving it around.

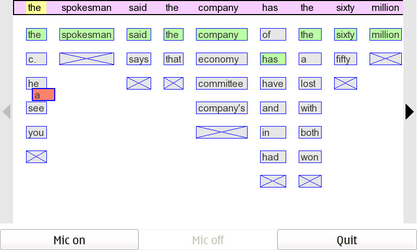

To copy a word between clusters, the user presses on a word and holds the stylus down for a second. The word then sticks to the stylus location, and the user can drag it to the target destination. While dragging, the current target cluster is indicated by highlighting its best word at the top of the display in yellow. Dragging over either scroll button during a copy scrolls the display while the copy operation continues. If a word is copied to a cluster that already contains the same word, that word is simply selected in the cluster.
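The drop step of a copy can be sketched like this. It is a hedged illustration: the `copy_word` function and the list-based representation are hypothetical, and the behaviour for a word that is not yet in the target cluster (append it and select it) is my assumption, since the text only spells out the duplicate case. The alternates "had" and "six" are made up for the example.

```python
def copy_word(clusters, selected, word, target):
    """Drop `word` onto the cluster at index `target`.

    clusters: list of lists of alternate words, one list per cluster.
    selected: list holding the selected alternate index for each cluster.
    If the target already contains the word, it is only selected;
    otherwise it is appended first (assumed behaviour).
    """
    if word not in clusters[target]:
        clusters[target].append(word)
    selected[target] = clusters[target].index(word)


clusters = [["shamrock"], ["has", "had"], ["sixty", "six"]]
selected = [0, 0, 0]

copy_word(clusters, selected, "six", 1)   # new word for cluster 1
print(clusters[1], selected[1])           # ['has', 'had', 'six'] 2

copy_word(clusters, selected, "had", 1)   # already present: just select it
print(clusters[1], selected[1])           # ['has', 'had', 'six'] 1
```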

Non-Confusion Network Corrections

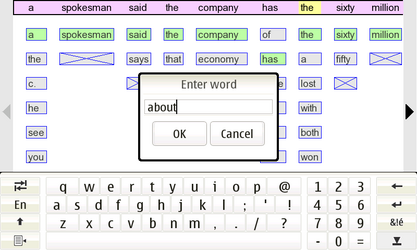

In the above example, the user wants "about" between "has" and "sixty", but this word did not appear as a possible alternative. The user can tap on any of the words in the purple box (or on the divider gaps) to initiate a correction. This brings up a text box in which the user can enter text using any standard Nokia method, such as the onscreen keyboard. During the correction, the target cluster is highlighted in yellow.

Tapping the Mic on button clears the current result and starts streaming audio to the recognizer. When the user finishes speaking, they tap the Mic off button. An animated progress bar is then displayed while the user waits for recognition to complete (usually only a few seconds). The UI supports both normal and fullscreen window sizes via the standard hardware button. A Quit button is provided to exit the application.

Here is the final set of libraries, binaries, configuration files, models, etc. that I used for this demo. I have no idea whether this will work on anybody else's device. If you want to try, be prepared to do some fiddling; this is by no means a simple Debian-package-like installation.

| Package | Description |
| --- | --- |
| Libraries | ARMEL binary libraries for SphinxBase, PocketSphinx, and Fortran (needed to make Sphinx work for some reason), plus my own shared libraries for recognition and visualization support. Unzip to /usr/lib on the device. The zip contains redundant copies of files that really could be symbolic links. |
| Binaries and config files | Contains the binary executable and associated flat-text configuration files. Put these wherever you like; I used /home/user. Make sure permissions are set so a non-root user can use them. The speechcn executable takes a single command-line parameter: the configuration file to load. I used the Personal Menu add-on to create my launch icons for the UI. |
| Acoustic and Language Model | US English speaker-independent narrowband acoustic model and 5K language model. The 5k_si_3gram.cfg file needs to point to where these are stored. I kept them on the internal memory card at /media/mmc2. There are other models scattered around my site. Be sure to use a narrowband acoustic model. |